Next week will mark the first anniversary of the Neurocomic project. Over the year, 53 pieces have been published within the project. All of them are disinformation and contain elements of hate speech, as well as insults directed at foreign statesmen and at political opponents of the current regime.

The project first appeared in “Narodnaya Gazeta” in April 2023. At the end of June it moved to the weekly “SB Belarus Today”, which is printed in full color with a claimed circulation of 400,000 copies.

The Neurocomic project grew out of a partnership between the state-owned “Narodnaya Gazeta” and the toxic Telegram channel “Ludazhory”, specifically between the newspaper’s editor-in-chief Dmitry Kryat and the publicist Yuri Terekh. Three years ago, Yuri Terekh was presented as a blogger and entrepreneur:

In June 2023, the media holding recommended that regional state publications use neural networks in their work.

An analysis of the headlines of all Neurocomic publications for disinformation narratives revealed the following:

- Sensationalism and emotionally charged language: The headlines contain emotionally charged words and phrases, for example, “Interpretation of a filthy occupation”, “not mine – not sorry”, “arms baron and drug dealer”. Such language is typically used to manipulate the reader’s emotions and draw attention to the article.

- Insults and stereotypes: The use of derogatory terms such as “chain dogs”, “serf”, “drug dealer”, “duo of scorched hypocrites” may indicate an attempt to discredit certain individuals or groups.

- Conspiracy theories: Headlines hint at hidden motives or secret operations without providing evidence, as in the case of “national security threat” or “external governance of Ukraine by the States”.

- Exaggeration and dramatization: Phrases like “guillotine”, “last Ukrainian”, “steep dive” create an exaggerated or dramatized narrative that may distort reality.

- Manipulative statements: Headlines such as “Is there life after sanctions for those who invented them” present opinions as facts, which can mislead readers and distort their understanding of actual events.

- Accusations without evidence: Headlines imply guilt, as in “how corrupt officials sniffed with corrupt officials”, but provide no confirmation of such claims.

- Personal attacks: Mentioning specific individuals with negative connotations, such as “Macron and his Napoleon complex”, can indicate an attempt to discredit them personally.

Overall, such headlines are usually designed to play on readers’ emotions, biases, and opinions, often using manipulative and disinformation techniques to shape a particular perception of events or people.

Thematic breakdown of the publications by target of the disinformation attacks:

- Ukraine 12

- Belarusian opposition 11

- Western democracies 9

- Poland 7

- USA 5

- France 4

- Lithuania 2

- Climate change 2

Serbia, Italy, Germany, Japan, Canada, and Moldova were each the subject of one publication. Some publications covered two topics at once, which is why the counts above add up to 58 rather than 53.

Here is an example of a recent publication about Ukraine and, personally, about the President of Ukraine, Volodymyr Zelensky:

Let’s analyze the accompanying text of this comic for disinformation narratives:

The text contains several clear indicators of disinformation narratives that can be used to manipulate public opinion:

- Sensational and unusual claims: The mention of “white powder” on President Zelensky’s desk and his alleged connection with the “drug mafia” is meant to attract attention and create a sensational impression. This technique is often used in disinformation campaigns to heighten the emotional impact on the reader.

- Lack of reliable sources: Although the text claims to be based on an “epic journalistic investigation”, it provides no specific evidence or links to reliable sources. The phrase “convincing evidence” is not backed by any actual content or links, which raises doubts about the authenticity of the information.

- Use of loaded language and emotional expressions: Descriptions such as “spicy turn”, “dubious substances”, and “deal of the century” are intended to intensify the emotional perception of the message and can mislead the reader about whether the facts presented are real.

- Anonymity and unverified claims: The mention of phone conversations allegedly intercepted by Interpol, without further details or any way to verify them, is another indicator of potential disinformation.

- Bias and stereotypes: The phrase “in our latitudes, where it has long been known that anything can be expected from Mr. Z” indicates the use of biased judgments and stereotypes to form the reader’s opinion, which is a common method of disinformation.

- Conspiracy theories and speculation: Pointing to a “neural network” that proposed its own version of the “deal of the century” adds an element of conspiracy theory, fueling interest and speculation without providing any real evidence.

These elements signal potential disinformation in the text and call for critical analysis and additional verification before drawing any conclusions or sharing the information further.

The WeVerify tool was used to analyze all possible distortions in the image of the President of the Republic of Moldova, who actually looks like this:

As can be seen, this publication aims to create a negative image of Moldova, the Republic of Poland, and the European Union as a whole. It also depicts illegal actions.

In summary, the project studied here is a full-fledged example of using the powerful capabilities of artificial intelligence to generate disinformation publications and images at scale.

The dangers of this approach are as follows:

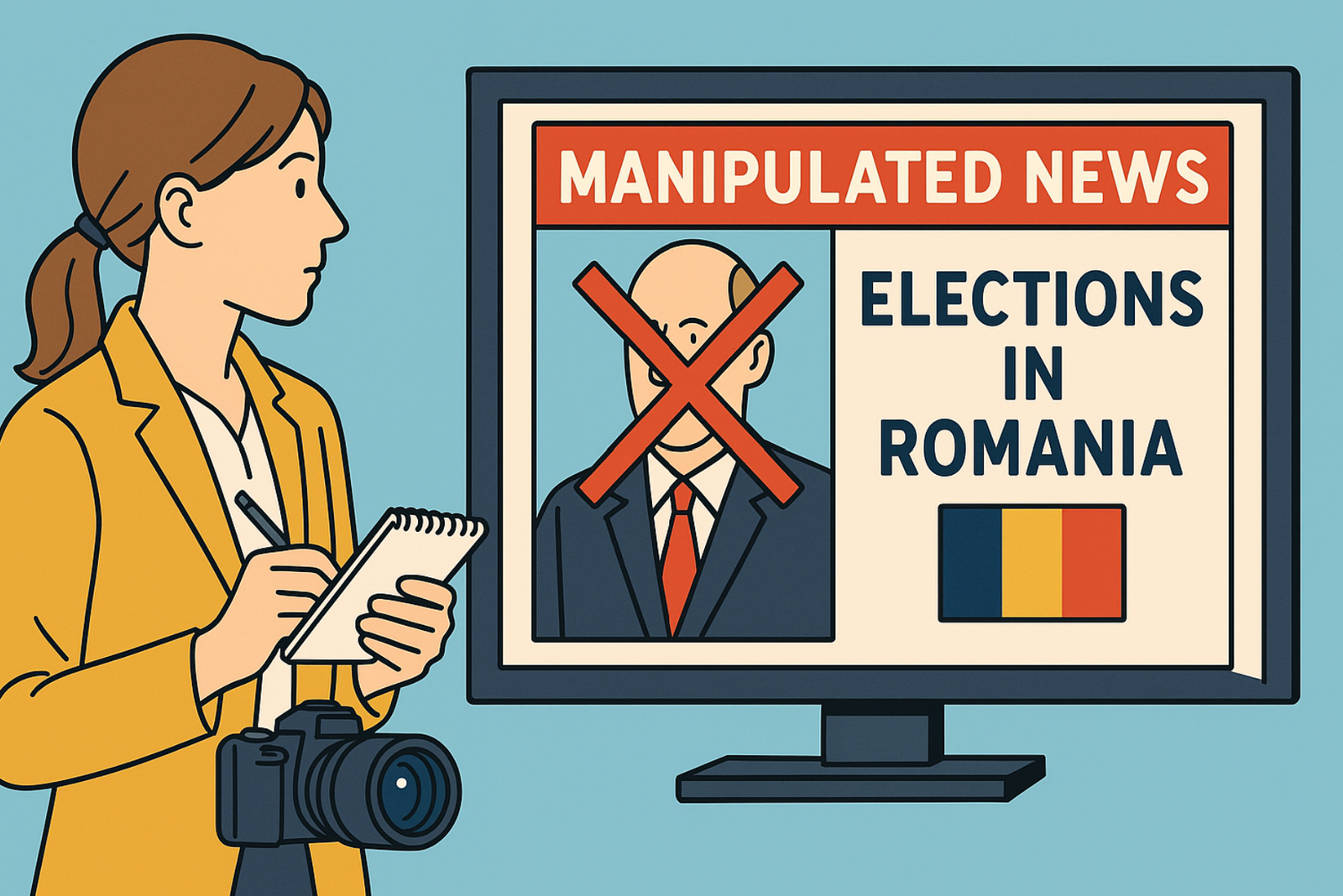

1. Scalability: AI can produce significant volumes of disinformation content in a short period of time, making it an ideal tool for influencing public opinion, manipulating elections, and undermining trust in the media.

2. Persuasiveness: Modern AI technologies, such as GPT (Generative Pre-trained Transformer) models for text, image generators for pictures, and deepfakes for video, can create materials that look real, making it difficult for users to distinguish true information from fake.

3. Targeting accuracy: AI can analyze large data sets to tailor disinformation to specific audiences, taking into account their preferences and vulnerabilities, which enhances the effect of manipulation.

4. Bypassing traditional barriers: AI can be used to circumvent filters and disinformation detection algorithms, adapting to countermeasures and steadily improving its ability to produce more realistic content.

5. Economic efficiency: Automating the disinformation creation process reduces costs and makes it accessible to a wider range of actors, including state and non-state entities.

6. Anonymity and lack of accountability: Using AI to generate disinformation campaigns allows organizers to hide their involvement, complicating the process of holding them accountable.

In response to these threats, it is necessary to develop and implement advanced technologies for detecting AI-generated content, as well as to increase awareness and media literacy among the audience. Moreover, international cooperation is important for developing legal and ethical frameworks to regulate the use of AI in content creation.
