In March 2025, a study was published that revealed extensive botnet activity in comments under Belarusian and international videos on YouTube. At that time, 85,736 comments on 1,132 videos left by 32,326 unique authors were analyzed, and it was discovered that less than 1% of accounts generated almost 12% of all comments, while 39% of videos were subjected to botnet attacks.

In the new study, the scope of the analysis was expanded to nearly 100,000 comments from April 2025. To improve the efficiency and accuracy of bot detection, a hybrid RAG (Retrieval-Augmented Generation) approach was implemented, which combines neural network analysis with retrieval from the system's own knowledge base.

This approach takes into account various aspects of bot behavior, including:

- Behavioral patterns (commenting frequency);

- Template phrases and repetitive comments;

- Account name structure;

- Temporal patterns of activity;

- Network connections between bots.

Using RAG made it possible to build a knowledge base of these patterns and to identify bots more effectively, without the freezes characteristic of direct LLM API requests. The result is a more robust and scalable approach to comment analysis, which is especially important when working with large data sets.

The new analysis identified more than 13,000 comments classified as bots, and more than 3,600 bot authors. These data confirm the continuing activity of botnets on Belarusian YouTube and the need for further monitoring of such information attacks and for countermeasures against them.

Methodology

The methodology represents a hybrid system combining:

- local heuristics (patterns, spam indicators, and tone),

- vector search (finding similar comments),

- LLM analysis using RAG (enriched with context and examples),

- caching (to speed up repeated calls),

- two-phase processing (sampling + bulk verification),

- automatic generation of reports and visualizations.

1. Preprocessing and local heuristics

Each comment is cleaned:

- URLs, special characters, and emojis are removed;

- language detection is performed (ru, ua, by);

- stop words are removed.

Then pattern analysis is applied:

- template phrases, suspicious names, and spam indicators are searched for;

- each comment is assigned a bot_score from 0.0 to 0.9;

- if bot_score >= 0.6, the comment is considered an “obvious bot” and is marked without LLM.

Pattern examples:

- Phrases: “жыве беларусь” (“long live Belarus”), “дзякуй за працу” (“thank you for your work”)

- Names: user-947, Ivan_1987

- Spam: words like “заработок” (“earnings”), “биткоин” (“bitcoin”), “telegram”
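The cleaning and scoring steps above can be sketched roughly as follows. This is a minimal illustration, not the study's actual code: the weights, the name regex, and the function names are assumptions, and language detection and stop-word removal are left out for brevity. Only the example patterns, the 0.0 to 0.9 score range, and the 0.6 “obvious bot” threshold come from the text.

```python
import re

# Pattern lists seeded with the examples from the text; real lists would be longer.
TEMPLATE_PHRASES = ["жыве беларусь", "дзякуй за працу"]
SPAM_WORDS = {"заработок", "биткоин", "telegram"}
# Assumed regex for names shaped like user-947 or Ivan_1987.
SUSPICIOUS_NAME = re.compile(r"^(user-\d+|[A-Za-z]+_\d{4})$")
URL = re.compile(r"https?://\S+|www\.\S+")

# Illustrative weights (assumption), chosen so that the maximum score is 0.9.
W_PHRASE, W_NAME, W_SPAM = 0.4, 0.2, 0.3
OBVIOUS_BOT = 0.6  # threshold stated in the text


def clean(text: str) -> str:
    """Lowercase, strip URLs, then strip punctuation, symbols, and emojis."""
    text = URL.sub(" ", text.lower())
    text = re.sub(r"[^\w\s]", " ", text)  # keeps letters, digits, underscores
    return re.sub(r"\s+", " ", text).strip()


def bot_score(author: str, text: str) -> float:
    """Sum the weights of the heuristics that fire, capped at 0.9."""
    cleaned = clean(text)
    score = 0.0
    if any(phrase in cleaned for phrase in TEMPLATE_PHRASES):
        score += W_PHRASE
    if SUSPICIOUS_NAME.match(author):
        score += W_NAME
    if SPAM_WORDS & set(cleaned.split()):
        score += W_SPAM
    return min(round(score, 2), 0.9)


def is_obvious_bot(author: str, text: str) -> bool:
    """Comments at or above the threshold are marked without calling the LLM."""
    return bot_score(author, text) >= OBVIOUS_BOT
```

With these illustrative weights, a template phrase posted from a suspicious-looking account is enough to reach the 0.6 threshold, while a single signal on its own is passed on to the later stages.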

2. Vectorization and RAG-search for similar comments

Remaining suspicious comments:

- are transformed into embeddings using SentenceTransformer;

- the nearest neighbors (top-k most similar comments) are retrieved for each one using cosine similarity;

- these examples are included in the prompt for LLM, enhancing the quality of analysis (this is RAG).
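The retrieval step can be illustrated with a small sketch. It assumes the embeddings have already been computed (in the described pipeline they would come from SentenceTransformer) and uses tiny hand-written vectors instead, so that the example runs without any model. The function names and the layout of the index are hypothetical.

```python
import math


def cosine(a, b):
    """Cosine similarity of two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def top_k_similar(query_vec, index, k=3):
    """Return the k most similar entries as (similarity, comment_id, label).

    `index` is a list of (comment_id, vector, label) tuples, where `label`
    is the classification already stored for that comment.
    """
    scored = [(cosine(query_vec, vec), cid, label) for cid, vec, label in index]
    scored.sort(key=lambda item: item[0], reverse=True)
    return scored[:k]


# Toy 3-dimensional "embeddings"; real ones have hundreds of dimensions.
index = [
    ("c1", [1.0, 0.0, 0.0], "bot"),
    ("c2", [0.0, 1.0, 0.0], "human"),
    ("c3", [0.9, 0.1, 0.0], "bot"),
    ("c4", [0.0, 0.0, 1.0], "human"),
]
neighbors = top_k_similar([1.0, 0.0, 0.0], index, k=2)
```

A linear scan like this is fine for illustration; at the scale of roughly 100,000 comments a vectorized or approximate nearest-neighbor index would be the more realistic choice.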

3. LLM (GPT) analysis with RAG

Each comment is analyzed through a prompt that includes:

- meta-information: author, text, heuristics (tone, patterns, spam),

- top-3 similar comments and their classifications,

- instruction: what to pay attention to (patterns, emotions, relevance, opinion),

- clear response format in JSON

If GPT doesn’t respond or returns an error, a weighted heuristic assessment (patterns + spam + tone) is used instead.
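A rough sketch of this step is shown below. The prompt wording, the JSON field names (is_bot, bot_score), the fallback weights, and the "heuristic" source label are all assumptions made for illustration. The LLM call is passed in as a plain function (call_llm) so that the example does not depend on any particular API client.

```python
import json

# Assumed weights for the fallback score; the study does not publish them.
FALLBACK_WEIGHTS = {"pattern": 0.5, "spam": 0.3, "tone": 0.2}
THRESHOLD = 0.5  # classification threshold used in the results section


def build_prompt(comment, heuristics, neighbors):
    """Assemble meta-information, up to 3 similar comments, and the instruction."""
    examples = "\n".join(
        f'- "{n["text"]}" -> {n["label"]}' for n in neighbors[:3]
    )
    return (
        f"Author: {comment['author']}\n"
        f"Text: {comment['text']}\n"
        f"Heuristics: {json.dumps(heuristics)}\n"
        f"Similar comments and their classifications:\n{examples}\n"
        "Pay attention to patterns, emotions, relevance, and opinion.\n"
        'Answer only with JSON: {"is_bot": true|false, "bot_score": 0.0-1.0}'
    )


def fallback_score(heuristics):
    """Weighted sum of the local heuristics, each expected to be in [0, 1]."""
    return round(
        sum(w * heuristics.get(name, 0.0) for name, w in FALLBACK_WEIGHTS.items()), 3
    )


def classify(comment, heuristics, neighbors, call_llm):
    """Ask the LLM; on any error or unparsable reply, fall back to heuristics."""
    prompt = build_prompt(comment, heuristics, neighbors)
    try:
        reply = json.loads(call_llm(prompt))
        return {
            "is_bot": bool(reply["is_bot"]),
            "bot_score": float(reply["bot_score"]),
            "source": "api",
        }
    except Exception:
        score = fallback_score(heuristics)
        return {"is_bot": score >= THRESHOLD, "bot_score": score, "source": "heuristic"}
```

Catching every exception in one place is a deliberately blunt choice here: a timeout, an empty reply, and malformed JSON all lead to the same heuristic fallback.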

4. Architecture and optimization

- Multithreading (ThreadPoolExecutor) and batch processing (25 comments at a time) speed up the analysis.

- Cache (diskcache) is used at the level of: embeddings, GPT responses, heuristic analyses.

- Initially, a selective training phase (10% of comments) is applied to speed up the mass analysis phase.

- All results are saved in .csv, and an interactive dashboard with visualizations is generated.
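The combination of batching, multithreading, and caching might look roughly like the sketch below. To keep it self-contained, a plain dict stands in for the diskcache store, and the classifier is passed in as a function. The function names, the hashing of the comment text into a cache key, and the worker count are assumptions. The 10% sampling phase and the CSV export are not shown.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor

BATCH_SIZE = 25  # batch size stated in the text


def batches(items, size=BATCH_SIZE):
    """Yield consecutive slices of at most `size` items."""
    for start in range(0, len(items), size):
        yield items[start:start + size]


def cached_classify(text, cache, classify):
    """Return (result, source), where source is "cache" on a hit, else "api"."""
    key = hashlib.sha256(text.encode("utf-8")).hexdigest()
    if key in cache:
        return cache[key], "cache"
    result = classify(text)
    cache[key] = result
    return result, "api"


def process_all(comments, classify, cache, max_workers=4):
    """Classify comments batch by batch, running each batch on a thread pool."""
    results = []
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        for batch in batches(comments):
            # pool.map preserves the input order within each batch.
            results.extend(
                pool.map(lambda t: cached_classify(t, cache, classify), batch)
            )
    return results
```

Note that two threads handling identical texts at the same moment can both miss the cache and both call the classifier; for an idempotent classifier this costs a duplicate call but does not change the result.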

Features and novelty of the approach

- Using RAG with GPT for evaluating comments in Cyrillic languages;

- Automatic enrichment of the pattern base, extracted from bot_indicators;

- Hybrid logic: first maximally fast filters, then precise LLM analysis;

- Reports for fact-checkers and analysts, suitable for publications and presentations.

Source data

Using the YouTube Data API v3, over 104,000 comments were collected from 2,607 videos related to more than 100 pro-democratic channels. After cleaning, 97,870 comments were analyzed.

Classification results:

Created by bots: 13,777 (14.1%)

Created by humans: 84,093 (85.9%)

A table of author statuses was created: 32,293 authors, of whom 6,729 were classified as bots and 25,564 as humans. Average number of comments per author: 3.03.

Distribution by classification sources:

api: 95,218 comments, of which bots: 12,942 (13.6%)

cache: 2,640 comments, of which bots: 823 (31.2%)

pattern: 12 comments, of which bots: 12 (100.0%)
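The reported figures are internally consistent, which can be checked with a few lines of arithmetic. The sketch below uses only the numbers given above; the variable names are arbitrary.

```python
total_comments = 97_870
bot_comments = 13_777
human_comments = 84_093
authors = 32_293

# (comments, of which bots) per classification source, as reported.
by_source = {
    "api": (95_218, 12_942),
    "cache": (2_640, 823),
    "pattern": (12, 12),
}


def pct(part, whole):
    """Percentage rounded to one decimal place."""
    return round(100 * part / whole, 1)


# Per-source totals add up to the overall totals.
source_total = sum(n for n, _ in by_source.values())
source_bots = sum(b for _, b in by_source.values())

bot_share = pct(bot_comments, total_comments)
human_share = pct(human_comments, total_comments)
share_by_source = {name: pct(b, n) for name, (n, b) in by_source.items()}
avg_per_author = round(total_comments / authors, 2)
```

The sources sum to 97,870 comments and 13,777 bot comments, the shares come out at 14.1% and 85.9%, and the per-source bot rates (13.6%, 31.2%, 100.0%) and the average of 3.03 comments per author match the values reported.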

Analysis of bot scores (threshold = 0.5):

Average score: 0.208

Median score: 0.100

Comments with high score (>=0.6): 12,809 (13.1%)

Score distribution:

This graph demonstrates a bimodal distribution of bot scores (bot_score). The majority of comments have a low bot_score (around 0.1), which corresponds to regular users. However, there are significant peaks in the range of 0.6-0.9, indicating the presence of bots with a high degree of confidence. The red dotted line at 0.5 marks the classification threshold, above which a comment is considered to be created by a bot. This distribution confirms the effectiveness of the classification algorithm, clearly separating regular users and bots.

Key visualizations

Number of comments vs Bot scores:

This graph visualizes the relationship between the number of comments by an author and their bot score. Orange dots represent accounts classified as bots (bot_score >= 0.5), blue ones – as regular users. The size of the dot corresponds to the number of comments. It can be seen that most bots leave a small number of comments (up to 100), but have a high bot_score (0.6-1.0). However, there are also bots that have left hundreds of comments. The red dotted line marks the classification threshold (0.5). The graph shows that real users can be very active (up to 500+ comments), but their bot_score remains low, which confirms the reliability of the detection method.

Distribution of bots by scores and number of comments:

This graph shows the frequency distribution of bot_score values only for comments classified as bots (bot_score >= 0.5). It’s noticeable that the largest number of bot comments have scores around 0.7 and 0.85, forming two distinct peaks. This may indicate two different types of botnets or automated systems with different behavioral characteristics. The presence of comments with bot_score values close to 1.0 indicates that the system has identified comments with a very high probability of belonging to bots, which confirms the accuracy of the classification.

Bot authors who made the most comments:

This graph shows the 20 accounts that left the most comments classified as bot-like. The account @NataliaPetrova leads with approximately 250 comments, well ahead of the next account on the list (@Dushman) with about 100 comments. A clear color gradient makes the difference in activity easy to see. The top 3 accounts (@NataliaPetrova, @Dushman, and @АЗЪФРСССР) demonstrate particularly high activity, which may indicate their priority role in the botnet infrastructure and the need for special monitoring of their activities.

Relationship between number of comments and percentage of bots:

This graph shows the relationship between the total number of comments under a video and the percentage of comments from bots. The color scale reflects the number of bots. The red dotted line indicates the average percentage of bots (62.1%) across the entire sample. Interestingly, videos with fewer comments (up to 500) are subject to a higher percentage of bots (up to 90%), while popular videos with a larger number of comments (1500+) usually have a lower percentage of bots (about 20-35%). This may indicate a botnet strategy: they strive to dominate discussions of less popular videos where their influence will be more noticeable, and where it’s easier for them to “outshout” real users.

Videos most subjected to botnet attacks

The graph in this section presents the distribution of comments between bots (red bars) and humans (turquoise bars) for various videos. The videos most subjected to botnet attacks are those with political content, especially those featuring Lukashenko (“Lukashenko filed a lawsuit…”, “Lukashenko WENT OFF ON officials…”, “Lukashenko will be in tribunal…” etc.).

It’s interesting to note that videos with critical or negative content about Lukashenko were most heavily attacked by bots, where the percentage of bot comments reaches 40-50% of the total number. This indicates a targeted strategy of public opinion management – bots concentrate on videos with potentially negative narratives for the authorities.

Also notable is the video “Belarusians debate: why work…”, which has the largest absolute number of comments and a high proportion of comments from real people. This may indicate that socio-economic topics generate the greatest natural interest from the audience, making it harder for bots to dominate such discussions.

The video “What will now happen with the Baltic states…” also attracted significant attention from both bots and real users, indicating high interest in the region’s geopolitical issues.

Overall, the graph clearly shows that bots are concentrated on politically sensitive topics related to the Belarusian authorities, which confirms the hypothesis about the targeted use of botnets for manipulating public opinion in the Belarusian segment of YouTube.

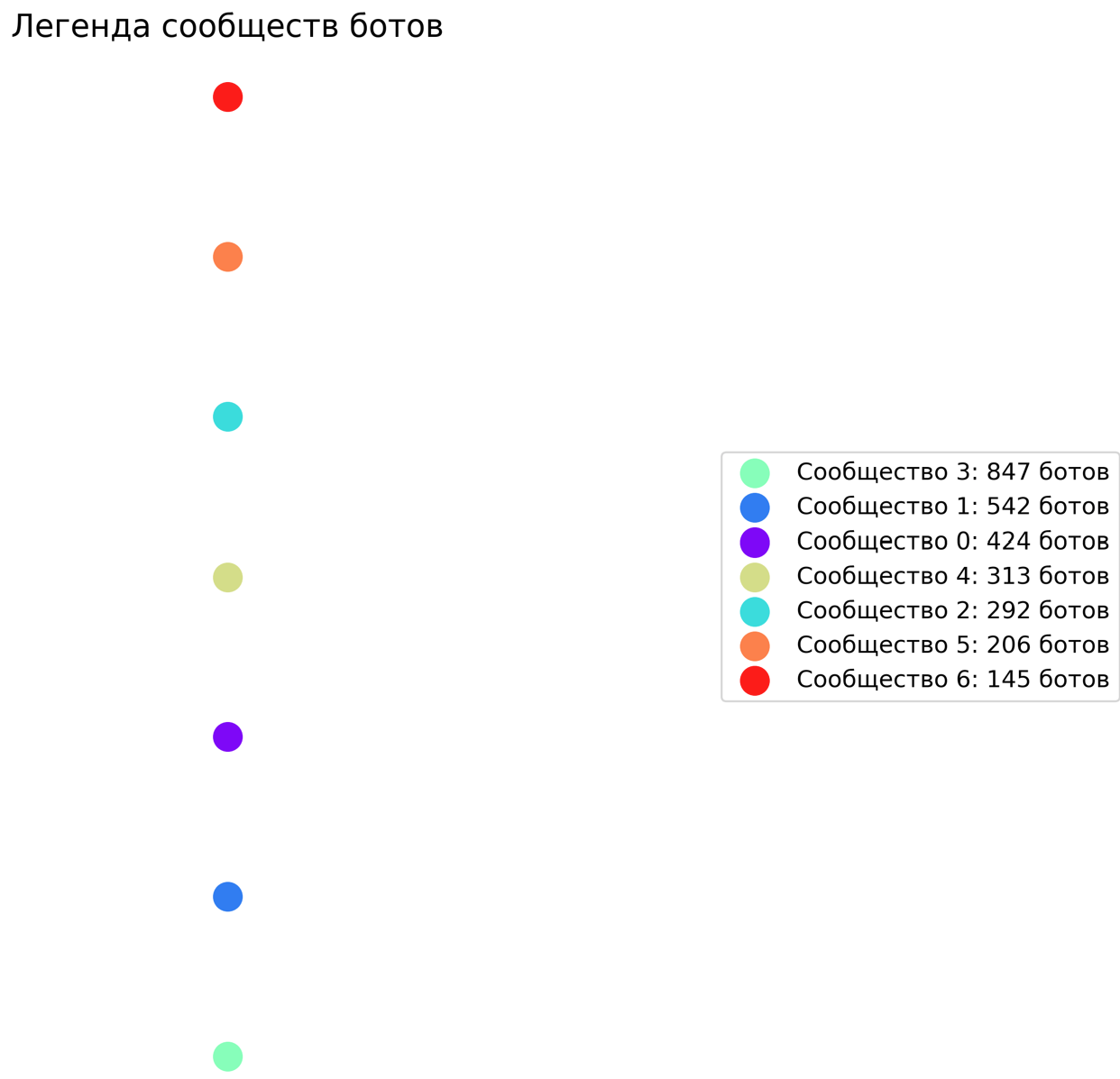

Bot network visualization

7 communities found

Top 5 largest communities:

Community 3: 847 bots

Community 1: 542 bots

Community 0: 424 bots

Community 4: 313 bots

Community 2: 292 bots

The analysis revealed a moderate but significant presence of bots (14.1%) in the sample of comments. Bots are mainly used to spread political messages and spam. Most active authors are real people.

The RAG-Bot Detector system showed high efficiency, successfully analyzing nearly 98,000 comments with a clear separation between bots and humans. Further expansion of the pattern base can accelerate future analyses.

The results indicate a significant but not critical level of manipulation of public opinion through YouTube comments, which requires continued monitoring and analysis.

This publication was developed by a research team under the leadership of Mikhail Doroshevich, PhD.